Key foundational technologies of intelligent drones

Drone "vision" technology

One of the key technologies that makes drones "smart" is machine vision: it lets the drone perceive its surroundings and turn the results into data that the operating system (OS) passes to other applications.

At present, the mainstream machine vision hardware technologies in the field of drones include binocular machine vision, infrared laser vision, and ultrasonic assisted detection.

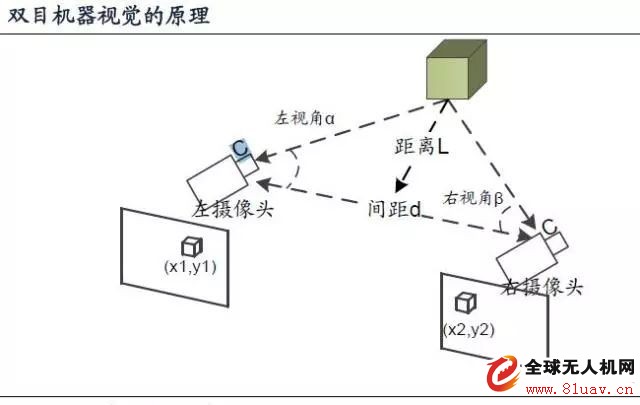

1. Binocular machine vision

Binocular machine vision is based on the principle of triangulation, similar to how the human eye reconstructs the three-dimensional world. Distance is determined by comparing the difference in viewing angle of the same object in images taken by two cameras facing the same direction, thereby restoring a three-dimensional stereoscopic model from two-dimensional images.

Binocular machine vision requires only two cameras, but it demands more computing power.

The hard part of binocular machine vision is not solving for the distance L from the viewing angles α and β and the camera spacing d, but getting the computer to "extract" the object from the background of the picture. At present, Qualcomm's drone reference design using binocular machine vision uses the flagship Snapdragon 801/820 chips, which indicates how demanding the computation is.

Distinguishing an object from the background comes naturally to the human eye, but not to a computer: the same scene is distorted and deformed to varying degrees on the image planes of cameras at different viewpoints. To let the computer pick out the object, the image segmentation algorithm has to perform computationally heavy operations such as convolution and differentiation, and doing this for real-time ranging raises the overall computing performance the UAV needs even further.
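The easy half of the problem, solving for L once the object has been matched in both images, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the text does not define the angles, so the sketch assumes α and β are the interior angles between the camera baseline and each camera's line of sight, and that L is the perpendicular distance from the baseline to the object. The function name is hypothetical.

```python
import math


def triangulate_distance(alpha_deg: float, beta_deg: float, d: float) -> float:
    """Perpendicular distance L from the camera baseline to the object.

    Assumed convention (not stated in the article): alpha and beta are the
    interior angles between the baseline and each camera's line of sight.
    """
    alpha = math.radians(alpha_deg)
    beta = math.radians(beta_deg)
    if not (alpha > 0 and beta > 0 and alpha + beta < math.pi):
        raise ValueError("angles must form a valid triangle with the baseline")
    # The foot of the perpendicular splits the baseline into L/tan(alpha)
    # and L/tan(beta), so d = L * (cot(alpha) + cot(beta)), which rearranges
    # to the expression below.
    return d * math.sin(alpha) * math.sin(beta) / math.sin(alpha + beta)
```

For example, with both angles at 45 degrees and the cameras 2 m apart, the object sits 1 m in front of the baseline. The expensive step in practice is finding the same object in both images, which this sketch takes as given.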

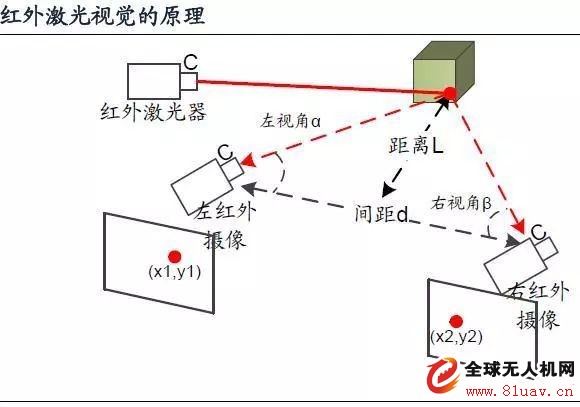

2. Infrared laser vision

To avoid this heavy computation and improve the accuracy of object identification, a group of manufacturers represented by Intel adopted infrared laser vision technology, such as the Intel RealSense machine vision module. The basic principle is shown in the figure below. The ranging principle is similar to that of binocular vision, but the feature being matched is an infrared laser spot projected onto the surface of the object. This fundamentally removes the computational burden of object recognition.

The price of infrared laser vision is that the two cameras must be replaced with an infrared camera, and the hardware cost and power consumption of an infrared laser scanner are added. The infrared laser scanner consists of an infrared laser emitter and a MEMS scanning mirror, so the overall increase in hardware cost is high.

Beyond its low computational requirements, infrared laser machine vision has two major advantages over binocular vision: it works in a wider range of times and places, including at night and under indoor lighting; and it has higher ranging accuracy, so it can accurately reconstruct 3D data of objects.

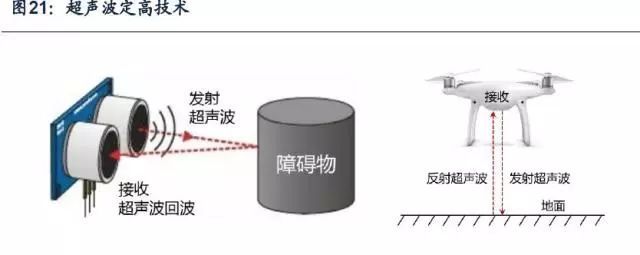

3. Ultrasonic detection

Ultrasonic obstacle detection is a relatively mature technology that has been widely used in military and civilian applications.

The advantage of ultrasound is that it can effectively detect objects such as glass and wires, for which binocular vision and infrared laser vision cannot measure distance accurately.

The disadvantage is poor accuracy: it can only detect the existence of obstacles and cannot extract accurate spatial information for path planning.

Fixed-point hovering technology

The core application of consumer drones is aerial photography, and the most demanding technical requirement that aerial photography places on a drone system is flight stability.

Hover positioning uses several technical means:

1) GPS/IMU combined positioning

2) Ultrasonic assisted height setting

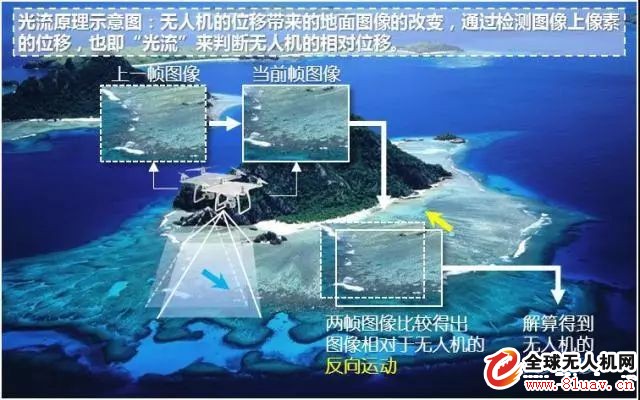

3) Image-based optical flow localization technology

GPS/IMU positioning technology

GPS/IMU positioning is a more traditional and mature positioning method.

GPS can measure the current horizontal position and height of the drone. The flight control system corrects the drone's motion according to its position and height relative to the hover point to achieve fixed-point hover.

However, GPS updates slowly and is susceptible to interference, which degrades the actual control performance. In engineering practice, therefore, the aircraft's IMU information is fused with the GPS signal through filtering to obtain more accurate and more frequently updated position and height information. This mode also allows emergency position and height information to be provided by the IMU alone when GPS fails. However, because the solution tends to diverge when position and height are computed from IMU information only, this method is only suitable for hover control in an open outdoor environment, and is not suitable indoors or in a signal-shielded environment.
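The fusion idea can be illustrated with a one-dimensional sketch. The article does not say which filter is used, so the code below assumes a simple fixed-gain (complementary-style) filter rather than the Kalman filter a real flight controller would more likely use; the gains, rates, and the 0.1 m/s² accelerometer bias are all made-up illustration values.

```python
from typing import List, Optional, Sequence


def fuse_gps_imu(
    accels: Sequence[float],
    gps_fixes: Sequence[Optional[float]],
    dt: float,
    k_pos: float = 0.2,
    k_vel: float = 0.5,
) -> List[float]:
    """Estimate 1-D position from fast IMU samples and sparse GPS fixes.

    accels    -- one acceleration sample per step (m/s^2)
    gps_fixes -- GPS position for that step, or None when no fix arrived
    k_pos, k_vel -- hypothetical correction gains, chosen for illustration
    """
    pos, vel = 0.0, 0.0
    track = []
    for accel, fix in zip(accels, gps_fixes):
        # Predict: integrate the IMU at its high update rate.
        vel += accel * dt
        pos += vel * dt
        # Correct: whenever a (slower) GPS fix arrives, pull the estimate
        # toward it so that integration errors cannot accumulate.
        if fix is not None:
            residual = fix - pos
            pos += k_pos * residual
            vel += k_vel * residual
        track.append(pos)
    return track


# A drone hovering at position 0 whose accelerometer reads a constant bias.
steps, dt = 1000, 0.01  # 10 s of IMU data at 100 Hz
biased_accels = [0.1] * steps
gps_10hz = [0.0 if i % 10 == 0 else None for i in range(steps)]

imu_only = fuse_gps_imu(biased_accels, [None] * steps, dt)
fused = fuse_gps_imu(biased_accels, gps_10hz, dt)
```

With these values the IMU-only estimate drifts by roughly 5 m in 10 seconds, which is the divergence described above, while the fused estimate stays within a few centimeters of the true position.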

Ultrasonic assisted height setting

The ultrasonic distance sensor is a relatively mature ranging sensor. It measures the distance to an obstacle in front of it from the time difference between emitting an ultrasonic pulse and receiving its echo. When the drone carries a downward-facing ultrasonic sensor, it can measure its distance from the ground accurately and so help hold a constant height, but ultrasonically assisted height hold does nothing to control drift in horizontal position.
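The time-of-flight calculation is short enough to show directly. This is a minimal sketch: the speed of sound is taken as roughly 343 m/s (dry air at about 20 °C), which is an assumption, and the function name is hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate value for dry air at about 20 degrees C


def echo_distance(round_trip_s: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance to the reflecting surface from the pulse's round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the surface and back, so halve the path length.
    return speed_m_s * round_trip_s / 2.0
```

An echo that returns after 10 ms therefore corresponds to a height of about 1.7 m. Because the speed of sound varies with temperature, a real sensor driver would typically compensate for it.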

Optical flow positioning

Optical flow positioning is a technique in which images captured by an image sensor are analyzed to indirectly obtain position and motion information.

With the evolution of image processing algorithms and the development of image processing hardware platforms, the accuracy and real-time performance of such algorithms are guaranteed, which enables them to be applied in UAV systems.

Optical flow localization is a method that uses the change of pixels over time in an image sequence, and the correlation between adjacent frames, to find the correspondence between the previous frame and the current frame, and from that computes the motion of objects between adjacent frames. In general, optical flow arises from the movement of foreground objects in the scene, the motion of the camera, or both together.

In UAV applications, the drone body carries a downward-facing optical flow camera, and positioning is based on the observed image of the ground. The principle is as follows: when the drone moves relative to the ground, the picture taken by the downward-looking lens "moves" in the opposite direction. From the drone's height above the ground (which is why the optical flow sensor is paired with a downward-facing ultrasonic sensor) and the amount of pixel movement observed in the ground image, the distance the drone has moved relative to the ground can be derived.

When the drone uses optical flow positioning to determine its own position, a general control algorithm can then hold its position in the horizontal plane and in height. At present, optical flow technology can basically achieve stable hovering indoors, but over time there is still a drift ranging from about ten centimeters to several tens of centimeters. However, this low-frequency, small-amplitude position change is acceptable for aerial photography.
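The relationship between pixel movement, height, and ground displacement can be sketched with a pinhole camera model. The assumptions here are not from the article: the camera points straight down at flat ground, the drone does not tilt between frames, and the focal length is expressed in pixels. The function name and parameters are hypothetical.

```python
def ground_displacement(pixel_shift_px: float, height_m: float, focal_length_px: float) -> float:
    """Drone displacement along one image axis, in meters.

    Assumes a pinhole camera looking straight down at flat ground.
    """
    if height_m <= 0 or focal_length_px <= 0:
        raise ValueError("height and focal length must be positive")
    # By similar triangles, one pixel of image motion corresponds to
    # height / focal_length meters on the ground. The sign is flipped
    # because the image moves opposite to the drone.
    return -pixel_shift_px * height_m / focal_length_px
```

With a hypothetical focal length of 400 px, a 20 px image shift seen from 2 m corresponds to a drone movement of 0.1 m in the opposite direction, while the same shift seen from 4 m corresponds to 0.2 m. This is why the height measurement is needed to scale the result.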

Tracking technology

A new trend for aerial photography drones is tracking mode: the user sets a point of interest and the drone follows it automatically. This is the direction in which drone intelligence is developing.

The current tracking technology is mainly divided into two types:

1) GPS tracking;

2) Image tracking.

GPS tracking

GPS tracking is relatively simple: the person being tracked holds the remote controller, which obtains the satellite positioning information of its current location and sends it to the drone, and the drone navigates toward the target position it receives.

GPS tracking is a relatively basic way of tracking, which is used by most drones on the market.
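The navigation step of GPS tracking comes down to computing how far away the reported target is and in which direction. The sketch below uses the standard haversine and initial-bearing formulas on a spherical Earth; the article does not say how any particular drone does this, so the function and its interface are illustrative assumptions.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def distance_and_bearing(lat1: float, lon1: float, lat2: float, lon2: float):
    """Great-circle distance (m) and initial bearing (degrees clockwise
    from north) from the drone at (lat1, lon1) to the target at (lat2, lon2).
    All inputs are in decimal degrees.
    """
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)

    # Haversine formula for the distance.
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

    # Initial bearing toward the target.
    y = math.sin(dlam) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlam)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return distance, bearing
```

A tracking loop would call this each time a new target position arrives from the remote controller and feed the distance and bearing to the flight controller.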

Image tracking (including face recognition tracking)

Image tracking relies on the image features of the chosen point of interest and tracks the target from image information. This involves both recognizing the target object in the image and tracking it, especially while the target is moving. When the target's appearance changes substantially, accurate tracking requires deep learning techniques, which are an active research direction in artificial intelligence.

Automatic obstacle avoidance technology

The flight safety of UAVs has always been a core issue related to the large-scale commercial application of UAVs. How to perceive obstacles and autonomous obstacle avoidance are the most advanced research topics in the field of UAV flight safety.

As autonomous flight and tracking flight are applied commercially on a large scale, the need for drones to avoid obstacles on their own during autonomous aerial photography and following has become more urgent.

At present, the following obstacle avoidance technologies are mainly used:

1) Obstacle avoidance based on ultrasonic ranging;

2) Obstacle avoidance based on binocular vision;

3) Lidar-based obstacle avoidance;

4) RealSense monocular + structured light obstacle avoidance.

Ultrasonic ranging obstacle avoidance

This technology is similar to a traditional parking sensor (reversing radar) system. Ultrasonic detection provides the distance to the obstacle, and a corresponding strategy is then used to avoid it. The detection distance is short and the detection range is small, but the method is very mature and easy to implement.

Binocular vision obstacle avoidance

This technology is based on depth reconstruction from binocular vision images. It reconstructs the depth of the scene in the field of view and uses that depth information to identify obstacles. The detection range is wider and the detection distance is longer, but the approach is technically difficult and is affected by changes in light intensity.

Lidar-based obstacle avoidance technology

This technology draws on lidar, which is more widely used on unmanned vehicles, to scan the environment around the drone and build a map.

RealSense monocular + structured light detection obstacle avoidance

RealSense is a visual perception system released earlier by Intel. It uses the principle of "active stereo imaging", which imitates the parallax principle of the human eye: a beam of infrared light is projected, left and right infrared sensors track the position of the light, and triangulation is then used to calculate the "depth" information in the 3D image. With a depth sensor and a full 1080p color lens, it accurately recognizes gestures, facial features, foreground and background, allowing the device to understand human movements and emotions. According to data released by Intel, RealSense's effective ranging distance can reach 10 meters.

Wireless image transmission technology

One of the core technologies of drone aerial photography is wireless image transmission. The ability of transmission is an important factor in measuring the aerial photography capability of drones.

Drone aerial photography

Drone aerial photography technology can be split, quite literally, into two parts, "UAV" + "aerial camera":

1. Image capture technology, that is, imaging and image processing technology. Headline figures include the pixel count and aperture size, but many other camera-module parameters affect image quality: single-pixel size, sensor technology, lens group technology, ISP technology, and so on.

2. UAV platform technology mainly refers to the fuselage control technology that provides a stable aerial photography environment for aerial photography.

Image capture technology: The image capture solutions currently on the market are integrations of mainstream camera modules from a few major brands. UAV manufacturers have little technical room to differentiate here, and because the technology is relatively mature, the gap between different products is not large.

UAV platform stability technology: This refers to the technologies related to the UAV's own flight, such as flight navigation and control. It is the most critical factor affecting image quality.

The significance of a stable shooting platform:

When shooting video, the jitter and tilt of the picture will seriously affect the smoothness and beauty of the picture;

When taking photos, especially in low light conditions, if the exposure time is long, the camera shake will cause blurring of the picture; if the exposure time is reduced, the sensitivity needs to be increased, the noise is increased, and the image quality is affected. Therefore, the stability of the fuselage is crucial for shooting.

The main factors affecting the stability of the fuselage:

For a typical present-day quadrotor UAV, the factors that disturb the position and attitude of the fuselage can be summarized as follows:

1. Drift in horizontal position and height caused by inaccurate hover positioning;

2. Tilt and shake of the body when the drone maneuvers;

3. Disturbances caused by motor vibration, gusts, etc.

For different types of disturbances, different strategies have been adopted on the drone system.

For horizontal and altitude drift outdoors, where the GPS signal is good, the drone positions itself mainly from the GPS signal. However, civilian GPS has limited accuracy and a low update frequency, so it is difficult to hold position by relying on GPS alone. Usually, the UAV also introduces an inertial module for combined positioning.

Indoors, or where GPS reception is limited, the drone system also uses a downward-facing camera to perform optical flow positioning. Optical flow localization is an image-based positioning method that has emerged in recent years. It works well when the drone is close to the ground.

If the drift of the position is a slow dynamic disturbance, then the tilt and jitter of the body caused by the drone's maneuvering are high frequency disturbance factors, and the influence on the picture is very significant.

When the drone needs to change position, the attitude of the quadrotor body must be adjusted substantially; in particular, just as the maneuver begins, the body attitude undergoes an adjustment of 40 degrees.

The body tilt caused by horizontal movement of the fuselage, together with the jitter while the body is moving, has a large effect on image capture, and it must be offset by mounting a stabilizing gimbal.

Motor vibration is a higher-frequency disturbance, so hollow rubber ball dampers can be used to filter it out, with good results. Gusts, by contrast, are highly random in form and size, so it is difficult to eliminate their effects completely. Only the combination of gimbal and optical flow can be relied on to suppress their influence.

Finally, one technique that cannot be ignored is electronic image stabilization. It uses sensors to detect the motion of the body and, without the aid of mechanical equipment, corrects and crops the displayed image accordingly. In this way image stabilization is achieved, to some extent, in software.

Gimbal technology

The gimbal plays a major role in suppressing the effects on aerial photography of the fuselage's active tilting, passive interference, and other disturbances.

Generally speaking, airborne gimbals are three-axis gimbals. As shown in the figure below, the "three axes" of a three-axis gimbal are pitch, yaw and roll, also called three degrees of freedom, each controlled by its own motor. In other words, through the motors on the frame of the three-degree-of-freedom gimbal, the camera is decoupled from the drone's own three degrees of freedom (pitch, yaw, roll), which isolates it from and counteracts the effects of drone movement.

Three-axis gimbal technology mainly involves two elements: 1. motion sensing; 2. offset control.

Motion sensing: The innermost part, where the camera is mounted, must be able to sense the camera's attitude deviation. A three-degree-of-freedom gyroscope is usually installed.

Offset control: When the sensor detects that the camera has deviated from the set attitude (generally level), the motors apply a reverse motion to counteract the change.

From the above, the accuracy and update frequency of the sensor, the accuracy and power of the motor output, and the performance of the control algorithm all have a relatively large impact on the final effect. However, judging by current products, drones equipped with a three-axis gimbal show no major difference in aerial photography use.
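The sense-then-counteract loop can be sketched as a per-axis proportional controller. This is a deliberately simplified illustration: real gimbals usually run a PID loop on motor torque at a high rate, and the gain, the idealized "motor applies exactly the commanded rotation" step, and all names below are assumptions.

```python
from typing import Tuple

Attitude = Tuple[float, float, float]  # (pitch, yaw, roll) in degrees


def counter_rotation(
    measured: Attitude,
    setpoint: Attitude = (0.0, 0.0, 0.0),
    gain: float = 0.5,
) -> Attitude:
    """Per-axis motor command that opposes the measured attitude deviation."""
    return tuple(gain * (s - m) for m, s in zip(measured, setpoint))


# The gyroscope reports that the camera has been knocked off level.
camera = (10.0, -5.0, 2.0)
for _ in range(20):
    command = counter_rotation(camera)
    # Idealized motors: the camera rotates by exactly the commanded amount.
    camera = tuple(angle + step for angle, step in zip(camera, command))
```

Each iteration halves the remaining deviation on every axis, so after 20 iterations the camera is level again to well within a thousandth of a degree. How quickly and precisely a real gimbal does this depends on the sensor, motor, and algorithm factors discussed here.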

From a functional point of view, several key factors are: 1. the isolation level between the gimbal and the fuselage; 2. the angular range controllable by the gimbal; 3. the speed of response; 4. the level of precision.

The importance of the gimbal to aerial photography

Displacement compensation: Even with good GPS + optical flow positioning, the drone still drifts noticeably while hovering, with an amplitude of about 0.3 m. When the displacement occurs, the center of the picture shifts. To further ensure the stability of the picture, a mechanical gimbal must be introduced.

Simple geometry shows that horizontal drift has a large effect on the picture when the subject is relatively close, but a small effect when the subject is far away. In the latter case, only a small gimbal deflection is needed to correct the offset and bring the subject back to the center of the picture.

Attitude compensation: Compared with movement of the drone's position, disturbance of the drone's own attitude has a more severe impact on the picture. Whether the subject is near or far, the influence is large.
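The geometric calculation referred to here can be made concrete. The sketch assumes the drift is perpendicular to the line of sight, so the gimbal deflection needed to re-center the subject is the arctangent of drift over distance; the function name is hypothetical.

```python
import math


def gimbal_deflection_deg(drift_m: float, distance_m: float) -> float:
    """Angle the gimbal must turn to re-center a subject after sideways drift."""
    if distance_m <= 0:
        raise ValueError("distance to the subject must be positive")
    return math.degrees(math.atan2(drift_m, distance_m))


near = gimbal_deflection_deg(0.3, 3.0)   # subject 3 m away
far = gimbal_deflection_deg(0.3, 30.0)   # subject 30 m away
```

For the 0.3 m drift mentioned above, a subject 3 m away needs a correction of about 5.7 degrees, while a subject 30 m away needs only about 0.57 degrees, which is why drift matters most at close range.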

Ultra-remote control drone technology

You can sit in front of a computer and, with just a click of the mouse, send the drone to another airspace or even another country.

The principle is actually very simple. The whole system needs two 4G access points, one on the drone and the other on the controller. The PC sends commands to the drone over the wireless network connection to control its flight path, and the drone transmits the high-definition video captured by its built-in camera back to the user, who can adjust the drone's flight route while monitoring the surrounding environment.
